Hey everyone!

I’m here to share an open-source project I built on GitHub for teams that train AI models with human feedback.

You can find the GitHub pull request here.

I started building this tool in July 2024 while applying for Anthropic’s “Human Feedback Interface” role. Applying to one of the best AI companies in the world was a genuinely insightful learning experience.

Note: Total development time, starting from a fresh Next.js template: 10 days.

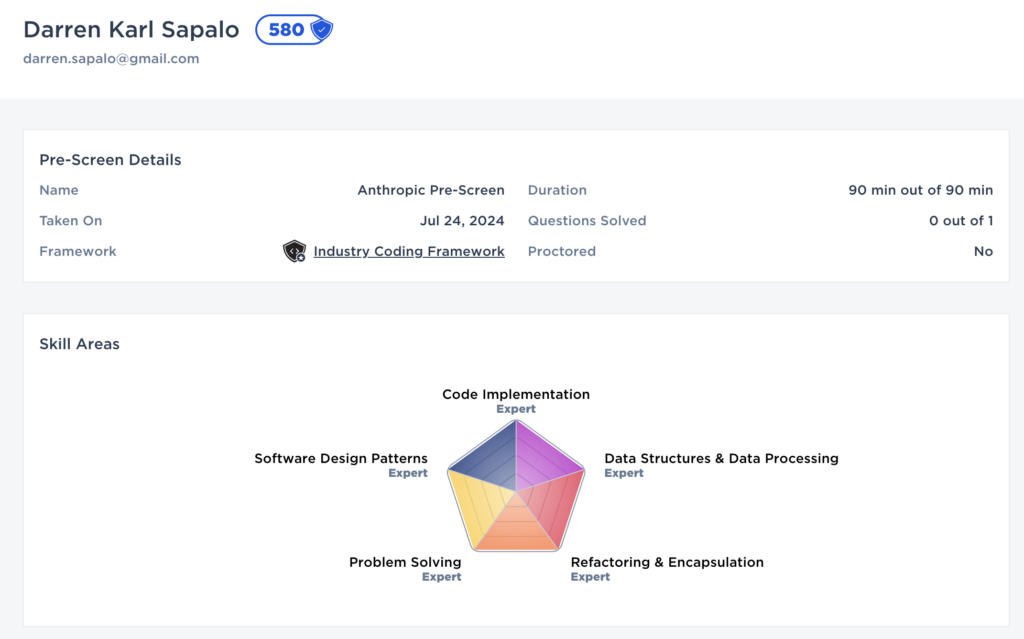

The bulk of the work in this repository happened between July 18 and July 24 (7 days), around the time I passed the automated technical screening on CodeSignal (see below). After graduating from my MBA program at Hult International Business School, I visited Canada in September. I came back to review the prototype from September 23 to September 25 (3 days), and on October 3, 2024, I recorded a video summary and fixed a few mobile responsiveness issues (less than an hour).

Rather than building a human-feedback interface from scratch, I looked for a research lab that had already built a data annotation tool and decided to revamp its frontend application.

In my research, I came across AllenAI (the Allen Institute for AI), a Seattle-based nonprofit AI research institute founded in 2014 by the late Paul Allen. There I found open-instruct, a GitHub repository started in 2023 by Hamish Ivison. It is their open-source effort to instruction-tune popular pretrained language models on publicly available datasets.

Opportunity

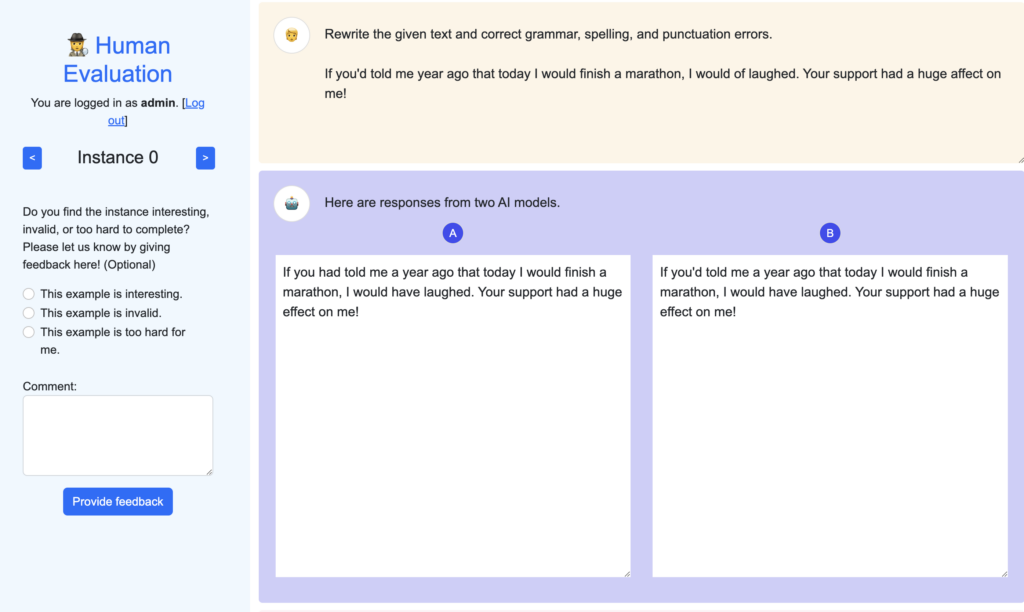

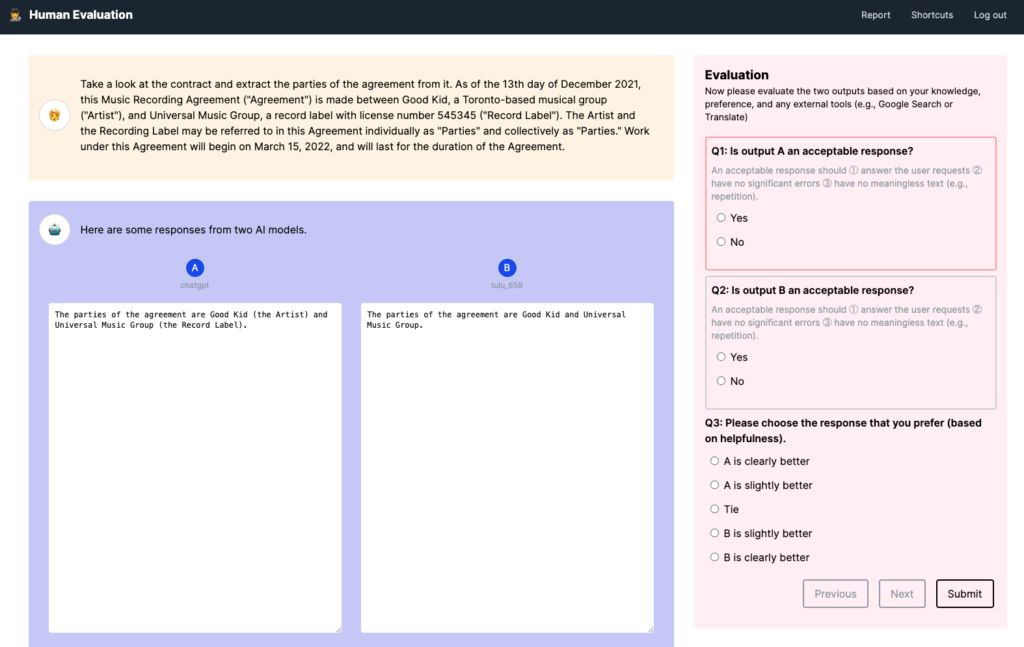

I reviewed their current implementation and found that the web interface was functional but had room for improvement. It uses a Python Flask backend and a single HTML file for the frontend data-labeling interface.

What I did

I decided to use Next.js, a modern JS framework, for the revised frontend experience.

By building on an open-source initiative started a year earlier, I could focus on demonstrating my frontend development and UX expertise, and show how quickly I can adapt to an existing codebase, delivering a better experience in less than a month!

For this short (one-month) project, I prioritized three objectives in the system design of the human-feedback interface: a smooth user experience, operations analytics, and state management.

Objective 1: Smooth User Experience – I needed to make sure that non-technical data annotators and researchers could use the website smoothly, so I added keyboard shortcuts for annotating, saving data, and moving between the data instances to be labeled! I also made the website mobile responsive.
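To make the keyboard-shortcut idea concrete, here is a minimal sketch of how key presses could be mapped to workspace actions. The bindings (`1`-`5` to rate, Ctrl/Cmd+S to save, the arrow keys to move between instances, `?` for help) and the `Action`/`KeyInput` types are hypothetical choices for illustration, not necessarily what the pull request uses.

```typescript
// Hypothetical workspace actions (illustrative only, not the PR's types).
type Action =
  | { kind: "rate"; score: number }
  | { kind: "save" }
  | { kind: "next" }
  | { kind: "previous" }
  | { kind: "toggleHelp" };

// Minimal shape of a keyboard event, so the mapping can be tested without a DOM.
interface KeyInput {
  key: string; // KeyboardEvent.key, e.g. "3", "ArrowRight", "?"
  ctrlKey: boolean;
  metaKey: boolean;
  targetIsTextField: boolean; // true when focus is in an <input> or <textarea>
}

// Map a key press to a workspace action, or null if the key is unbound.
function keyToAction(input: KeyInput): Action | null {
  // Don't hijack keys while the annotator is typing a free-text note.
  if (input.targetIsTextField) return null;

  const withModifier = input.ctrlKey || input.metaKey;
  // Ctrl+S / Cmd+S saves the current annotation.
  if (withModifier && input.key.toLowerCase() === "s") return { kind: "save" };
  // Leave every other modified key to the browser.
  if (withModifier) return null;

  // Number keys 1-5 assign a rating on a hypothetical 5-point scale.
  if (/^[1-5]$/.test(input.key)) return { kind: "rate", score: Number(input.key) };

  switch (input.key) {
    case "ArrowRight":
      return { kind: "next" };
    case "ArrowLeft":
      return { kind: "previous" };
    case "?":
      return { kind: "toggleHelp" };
    default:
      return null;
  }
}
```

In a React component, a `keydown` listener registered on `window` inside `useEffect` could build a `KeyInput` from the event, call `keyToAction`, call `event.preventDefault()` when an action is returned (so Ctrl+S doesn't open the browser's save dialog), and dispatch the action. Keeping the mapping as a pure function makes it easy to unit-test.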

Objective 2: Operations Analytics – As a business owner, you need to see how well your research team is performing. With Google Analytics 4 (GA4), you can answer questions like:

- On alignment and variance: Which AI-generated responses do our annotators agree on most? Which are the most controversial, i.e., the most widely disagreed upon?

- On fine-tuning direction: After normalizing by the length of the output, which AI prompts take the longest to evaluate?

These insights show you, as a business owner, which aspects of your data need further analysis and where your team should spend most of its effort. If you’re curious how these metrics can be tracked, I’ll go into the specifics of the GA4 implementation in a separate blog post.
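Once annotation events are exported, both questions reduce to simple aggregations. The sketch below assumes each exported record carries a prompt ID, a response ID, a numeric rating, the time spent in milliseconds, and the output length in characters; the `AnnotationRecord` shape and the function names are hypothetical and do not reflect GA4's export schema. Disagreement is measured as the population variance of ratings per response, and evaluation effort as milliseconds per 100 output characters.

```typescript
// Hypothetical shape of one exported annotation record (not the GA4 schema).
interface AnnotationRecord {
  promptId: string;
  responseId: string;
  rating: number; // e.g. a 1-5 score
  durationMs: number; // time the annotator spent on this instance
  outputLength: number; // length of the model output in characters
}

// Group items into a Map keyed by the given function.
function groupBy<T>(items: T[], key: (item: T) => string): Map<string, T[]> {
  const groups = new Map<string, T[]>();
  for (const item of items) {
    const k = key(item);
    const bucket = groups.get(k);
    if (bucket) bucket.push(item);
    else groups.set(k, [item]);
  }
  return groups;
}

// Arithmetic mean of a non-empty list.
function mean(values: number[]): number {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Population variance of a non-empty list.
function variance(values: number[]): number {
  const m = mean(values);
  return mean(values.map((v) => (v - m) ** 2));
}

// Responses sorted from most to least controversial (highest rating variance first).
function mostControversial(records: AnnotationRecord[]): Array<[string, number]> {
  return Array.from(groupBy(records, (r) => r.responseId))
    .map(([id, rs]): [string, number] => [id, variance(rs.map((r) => r.rating))])
    .sort((a, b) => b[1] - a[1]);
}

// Prompts sorted by mean milliseconds per 100 output characters (slowest first).
function slowestPrompts(records: AnnotationRecord[]): Array<[string, number]> {
  return Array.from(groupBy(records, (r) => r.promptId))
    .map(([id, rs]): [string, number] => [
      id,
      // Math.max(length, 1) guards against division by zero for empty outputs.
      mean(rs.map((r) => (r.durationMs / Math.max(r.outputLength, 1)) * 100)),
    ])
    .sort((a, b) => b[1] - a[1]);
}
```

Variance is only one possible disagreement measure; for categorical labels, an inter-annotator agreement statistic such as Krippendorff’s alpha may be a better fit.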

At a high level:

- Page view events fire whenever someone views a single data instance;

- Annotation events fire when an annotator labels a data instance and record how long the labeling took; and

- Audiences can be used to observe how annotation performance varies across different kinds of annotators!
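As an illustration of the annotation event described above, here is a minimal sketch that measures how long an annotator spends on one instance and hands the result to a `gtag`-style `send` function. The event name `annotation_submitted` and the parameters `instance_id`, `label`, `duration_ms`, and `annotator_role` are hypothetical. In the browser, `send` would typically be `(name, params) => gtag("event", name, params)`; the clock is injectable so the logic can be tested outside a browser.

```typescript
// Parameters attached to the hypothetical annotation event.
type EventParams = Record<string, string | number>;

// gtag-style sender; in the browser this could wrap gtag("event", name, params).
type SendEvent = (name: string, params: EventParams) => void;

// Tracks time spent on a single data instance and reports it once labeled.
class AnnotationTimer {
  private readonly send: SendEvent;
  private readonly now: () => number;
  private startedAt: number | null = null;
  private instanceId: string | null = null;

  constructor(send: SendEvent, now: () => number = () => Date.now()) {
    this.send = send;
    this.now = now;
  }

  // Call when an instance is shown (alongside the page view event).
  start(instanceId: string): void {
    this.instanceId = instanceId;
    this.startedAt = this.now();
  }

  // Call when the annotator submits a label; sends the event and returns the duration.
  submit(label: string, annotatorRole: string): number | null {
    if (this.startedAt === null || this.instanceId === null) return null;
    const durationMs = this.now() - this.startedAt;
    this.send("annotation_submitted", {
      instance_id: this.instanceId,
      label,
      duration_ms: durationMs,
      // Hypothetical field; could be registered as a GA4 custom dimension for segmenting.
      annotator_role: annotatorRole,
    });
    // Reset so a second submit without start() does not double-count.
    this.startedAt = null;
    this.instanceId = null;
    return durationMs;
  }
}
```

Keeping the sender and clock as constructor arguments means the same class works in production (wrapping `gtag`) and in tests (a fake clock and an array that records events).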

Objective 3: State Management – Finally, I built the revamped frontend application with Next.js and RxJS. React-based components let me demonstrate how I modularize and organize code clearly, as well as my expertise in state management. RxJS lets me demonstrate how I handle and orchestrate network calls and integrate them with state management.
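To give a flavor of the state-management side, here is a minimal sketch of a reducer for the annotation workspace (current instance, labels, and save status), in the style of React’s `useReducer`. It is plain TypeScript so it runs on its own; it is not the RxJS code from the pull request, and the state shape and action names are hypothetical. In an RxJS setup, a save request could be modeled as an observable whose outcome is dispatched back into the reducer as `saveSucceeded` or `saveFailed`.

```typescript
// Hypothetical workspace state for the annotation UI.
interface WorkspaceState {
  index: number; // which data instance is on screen
  total: number; // number of instances loaded (assumed >= 1)
  labels: Record<number, string>; // chosen label per instance index
  saveStatus: "idle" | "saving" | "saved" | "error";
}

type WorkspaceAction =
  | { type: "label"; value: string }
  | { type: "next" }
  | { type: "previous" }
  | { type: "saveStarted" }
  | { type: "saveSucceeded" }
  | { type: "saveFailed" };

// Pure reducer: returns a new state object and never mutates the old one.
function workspaceReducer(state: WorkspaceState, action: WorkspaceAction): WorkspaceState {
  switch (action.type) {
    case "label":
      // Recording a label marks the workspace as having unsaved changes.
      return {
        ...state,
        labels: { ...state.labels, [state.index]: action.value },
        saveStatus: "idle",
      };
    case "next":
      // Clamp so navigation never runs past the last instance.
      return { ...state, index: Math.min(state.index + 1, state.total - 1) };
    case "previous":
      return { ...state, index: Math.max(state.index - 1, 0) };
    case "saveStarted":
      return { ...state, saveStatus: "saving" };
    case "saveSucceeded":
      return { ...state, saveStatus: "saved" };
    case "saveFailed":
      return { ...state, saveStatus: "error" };
    default: {
      // Exhaustiveness check: TypeScript reports an error here if a case is missing.
      const unhandled: never = action;
      return unhandled;
    }
  }
}
```

Because the reducer is pure, the network layer (RxJS or otherwise) only needs to dispatch actions, and every state transition can be tested without a browser.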

Overall, this was a fun, fast-paced, one-month project that I can now add to my portfolio. I scoped a tight roadmap to demonstrate as many skills and capabilities as possible in roughly 10 days of development.

Roadmap

- ✅ Complete login/logout flow

- ✅ Enforce auth guards on authenticated routes in Next.js

- ✅ Preload data in the `/fine-tuning` route

- ✅ Successfully insert data into `evaluation.db`, tested using `python export_db.py`

- ✅ Responsiveness check for all pages

- Define metrics for measuring human-feedback transactions (0%)

- Note: The AllenAI team’s original template doesn’t have GA4 analytics configured. Setting up event tracking should take less than 15 minutes.
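For the auth-guard item in the roadmap, one common approach in Next.js is to check the session in `middleware.ts` and redirect unauthenticated visitors. The sketch below keeps only the decision logic, as a pure function that runs on its own. The `/fine-tuning` route comes from the roadmap; `/dashboard`, `/login`, and the `next` query parameter are hypothetical, and this is not necessarily how the pull request implements its guards.

```typescript
// Route prefixes that require a signed-in user. "/fine-tuning" comes from the
// roadmap; "/dashboard" is a hypothetical example.
const PROTECTED_PREFIXES = ["/fine-tuning", "/dashboard"];
const LOGIN_PATH = "/login"; // hypothetical login route

// True if pathname is a protected prefix or nested under one.
function isProtected(pathname: string): boolean {
  return PROTECTED_PREFIXES.some(
    (prefix) => pathname === prefix || pathname.startsWith(prefix + "/"),
  );
}

// Returns the path to redirect to, or null if the request may proceed.
function guardRedirect(pathname: string, hasSession: boolean): string | null {
  if (isProtected(pathname) && !hasSession) {
    // Remember where the user was headed so login can send them back.
    return `${LOGIN_PATH}?next=${encodeURIComponent(pathname)}`;
  }
  if (pathname === LOGIN_PATH && hasSession) {
    // Signed-in users skip the login page.
    return PROTECTED_PREFIXES[0];
  }
  return null;
}
```

In `middleware.ts`, a non-null result could become `NextResponse.redirect(new URL(target, request.url))` and a null result `NextResponse.next()`, with `hasSession` derived from a session cookie read via `request.cookies.get(...)`.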

Nice-to-haves

- ✅ Improved the workspace design for a better annotation experience (e.g., no scrolling needed)

- ✅ Added keyboard shortcuts for rapidly annotating instances

- ✅ Pressing “?” opens the keyboard shortcuts reference

All code changes are available in the pull request here.